Troubleshooting volume mount issues on Azure Container Apps

This post covers troubleshooting issues seen when setting up volume mounts on Azure Container Apps.

Overview

Currently, with Container Apps, you can mount three (3) different types of volumes:

- Ephemeral Volume

- Azure Files

- Secrets (preview)

There is also the option to write to the local container filesystem. Both this option (the container filesystem) and “Ephemeral” volumes are ephemeral overall and are not designed to persist data for long periods of time, since pods/replicas are ephemeral as well.

This post covers Azure Files mounts, since this option, compared to Ephemeral volumes, introduces a few scenarios that can cause a mount operation to fail.

What behavior will I see

If a volume set up with Azure Files is unable to be mounted to the pod, you may see a few notable behaviors:

- Zero replicas. Additionally, the “Console” tab may show “This revision is scaled to zero”. This is because the volume is mounted to a pod early in the pod lifecycle; when this fails, the pod (or replica) can never be created. Therefore, nothing is ever started, and ultimately pods/replicas will show zero (0).

- In the Revisions blade it may show the Revision as “Scaled to 0”

- This is the case even if the minimum replica count is set to >= 1.

- The same behavior can potentially be seen in other container or Kubernetes environments: if you try to mount a volume that is invalid or inaccessible, the container or pod will likely never be created.

- If browsing the application through its FQDN, a stream timeout will occur, due to hitting the 240-second request duration limit defined for ingress.

- There may be no stdout in ContainerAppConsoleLogs_CL, since a pod/replica is never running.

- A Revision may show as indefinitely stuck in a “Provisioning” or “Failed” state.

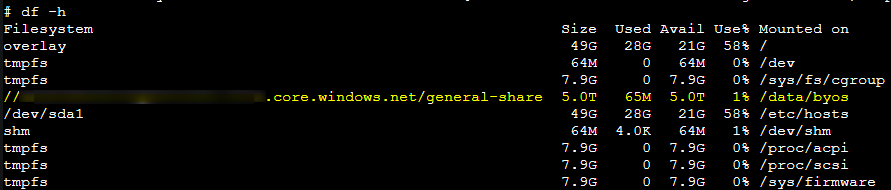

In a successful scenario, you can connect to a pod/replica via the Console blade and run df -h to validate that the mount is seen, like below:

A note about dedicated environments:

For dedicated environments (Consumption profile or Workload profiles), the error will instead show as the following:

Container 'some-container' was terminated with exit code '' and reason 'VolumeMountFailure'

In this case, the reason why the volume failed to mount will not be logged, but this post can still be used to rule out potential problems.

Troubleshooting

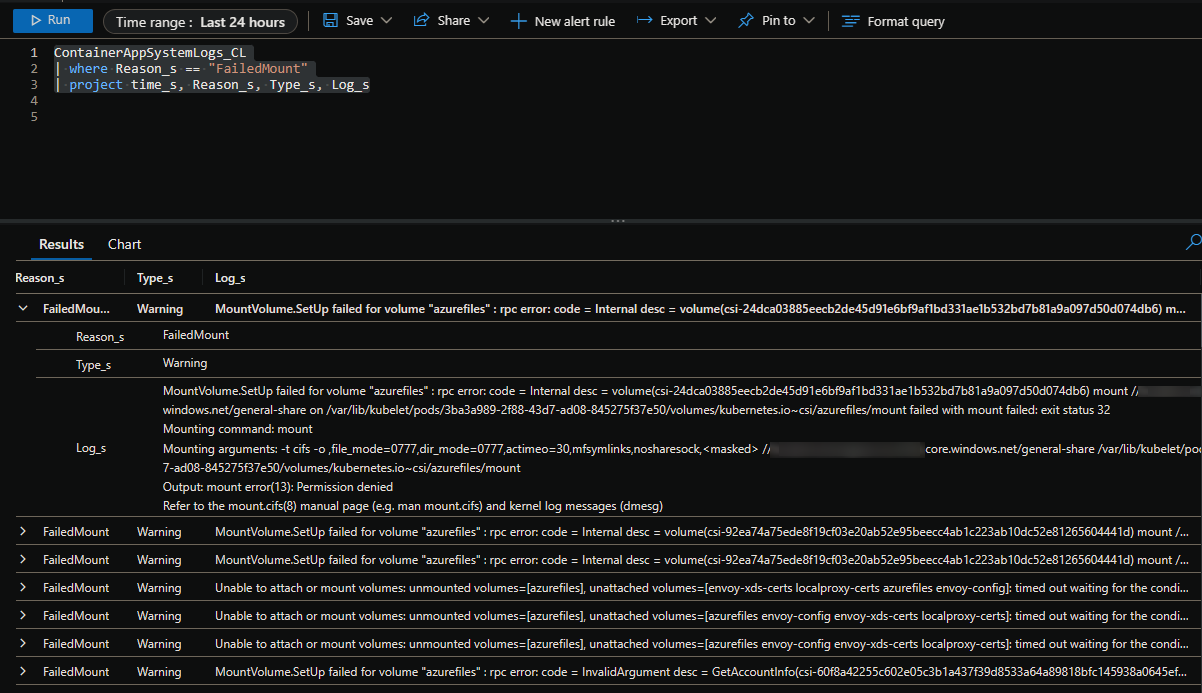

You can use the ContainerAppSystemLogs_CL table to check whether failed mount operations are occurring.

An example of this query is:

```kusto
ContainerAppSystemLogs_CL
| where Reason_s == "FailedMount"
| project time_s, Reason_s, Type_s, Log_s
```

If there are explicit failed mount operations, the output will look something like the below. In this case, the mount is failing due to firewall restrictions on the storage account side. The message may vary depending on the issue:

NOTE: There are some scenarios where a volume cannot be mounted but nothing is logged to the above table. For instance, with a misconfigured Service Endpoint on the Storage Account side, the Storage Account can’t be contacted from the Container Apps side; this may not show any logging in the above table, yet the app will still show the same behavior described in the What behavior will I see section.
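If you prefer a shell over the portal, the same query can be run with the Azure CLI. This is a minimal sketch, assuming the Azure CLI is installed and signed in (az login) and that you know the Log Analytics workspace's customer ID (a GUID). query_failed_mounts is a hypothetical helper name, and the P1D (last 24 hours) default is an arbitrary choice.

```shell
# Sketch: run the FailedMount query from a shell via the Azure CLI.
# Assumes `az login` has been done and the first argument is the Log Analytics
# workspace customer ID (GUID). query_failed_mounts is a hypothetical name.
query_failed_mounts() {
  local workspace_id="$1"
  local timespan="${2:-P1D}"   # ISO 8601 duration; P1D = last 24 hours
  local query='ContainerAppSystemLogs_CL
| where Reason_s == "FailedMount"
| project time_s, Reason_s, Type_s, Log_s'

  if [ -z "$workspace_id" ]; then
    echo "usage: query_failed_mounts <workspace-guid> [iso8601-timespan]" >&2
    return 2
  fi

  az monitor log-analytics query \
    --workspace "$workspace_id" \
    --analytics-query "$query" \
    --timespan "$timespan" \
    --output table
}

# Example (placeholder GUID):
# query_failed_mounts 00000000-0000-0000-0000-000000000000 P2D
```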

Storage Volume troubleshooting scenarios

Documentation for using storage mounts in Azure Container Apps can be found here.

Common Errors

The errors below may show in ContainerAppSystemLogs_CL, depending on the scenario encountered:

- Output: mount error(115): Operation now in progress

  - It is likely that a UDR is set that is causing traffic to storage to be blocked.

- Output: mount error: could not resolve address for someaddress.file.core.windows.net: Unknown error

  - The Storage Account FQDN may not be resolvable: DNS may be misconfigured, or the name may be unresolvable with the current DNS servers.

  - If there is a Private Endpoint on the Storage Account, ensure any Private DNS zones are properly configured. Misconfigured records/IPs can cause this behavior.

- Output: mount error(13): Permission denied

  - The address is resolvable but traffic may be blocked from accessing storage. Is there a firewall on storage blocking the IPs of the environment? Is storage able to be accessed from a jumpbox in the same VNET?

    - Blocking ports 443 and 445 (which Azure Files uses) will cause this behavior.

    - In general, the subnet of the Container App Environment needs access to the Storage Account.

  - Also ensure that the file share exists and that you’ve put in the correct access key when initially creating the Storage Resource on the Container App Environment.

  - This can also be caused by incompatible security settings on Azure Files, as described in Azure Files security compatibility on Container Apps.

- Output: mount error(2): No such file or directory

  - Does the File Share exist? Review whether it was deleted or whether the name does not exist.
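When going through many log lines, a small lookup can save some back-and-forth with the list above. The sketch below uses a hypothetical function name, classify_mount_error, and only encodes the four errors described in this section; anything else is reported as unknown rather than guessed at.

```shell
# Sketch: map a mount error line (for example a Log_s value from
# ContainerAppSystemLogs_CL) to the likely causes listed above.
# classify_mount_error is a hypothetical helper; it only knows the four
# errors described in this post and prints "unknown" for anything else.
classify_mount_error() {
  case "$1" in
    *"mount error(115)"*)
      echo "network: a UDR may be blocking traffic to storage" ;;
    *"could not resolve address"*)
      echo "dns: check DNS servers and any Private DNS zones for the storage FQDN" ;;
    *"mount error(13)"*)
      echo "access: check storage firewall, ports 443/445, share name, access key and security settings" ;;
    *"mount error(2)"*)
      echo "missing: the file share may have been deleted or the name is wrong" ;;
    *)
      echo "unknown" ;;
  esac
}

# Example:
# classify_mount_error "Output: mount error(13): Permission denied"
```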

Deleted File Share or Storage Account

If a File Share that is mapped to the application is deleted while the application is running, you may see some of the following scenarios:

- Read/write operations at runtime may fail, even before any of the Pods are restarted.

- If using the Console to access the Pod and running container:

  - The volume will be removed shortly after the file share is deleted. You can validate this by running df -h after the deletion; you will notice the volume is gone a few moments later.

  - If you are inside the mapped volume directory, trying any kind of operation on or in the volume that previously existed on the container file system may show one of the following:

    - ls: cannot open directory '.': No such file or directory

    - ls: .: No error information

    - No such file or directory

Trying to run df -h may also show df: /some/dir: No such file or directory, where /some/dir is the location where the volume was previously mounted.
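Besides watching df -h, you can check whether a path is still listed as a mount point by reading /proc/mounts. This is a sketch assuming a Linux container where /proc/mounts is readable; is_mounted is a hypothetical helper, and mount points containing spaces are not handled.

```shell
# Sketch: report whether a directory is still listed as a mount point.
# Reads /proc/mounts by default; pass a second argument to read a saved copy.
# is_mounted is a hypothetical helper. Mount points containing spaces are
# escaped (\040) in /proc/mounts and are not handled here.
is_mounted() {
  local path="${1%/}" mounts="${2:-/proc/mounts}"
  [ -n "$path" ] || path="/"      # "/" becomes "" after stripping the slash
  # Field 2 of each /proc/mounts line is the mount point.
  awk -v p="$path" '$2 == p { found = 1 } END { exit !found }' "$mounts"
}

# Example (placeholder path):
# if is_mounted /some/dir; then echo "still mounted"; else echo "volume is gone"; fi
```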

Storage Firewall

If a firewall or a Private Endpoint is enabled on the Storage Account but not properly set up to allow traffic, this can adversely affect the application in the following ways:

- If a Storage Firewall is enabled, the Container App has no access, and a restart or stop operation is attempted, the revision may show as indefinitely “Provisioning”, or in a failed provisioning state.

  - Deployments may subsequently fail, as the replica relies on this volume being mounted.

- If connected to a replica using the Console option, the storage volume may disappear:

  - If you were in the mounted directory at the time (assuming a directory with the same name did not otherwise exist) and try to do an operation on the file system, it may show ls: cannot open directory '.': Host is down

  - If you try to run df -h after enabling a firewall that the application has no access through, you may see df: /some/path: Resource temporarily unavailable

- If a firewall was enabled and the application does not have proper access, read/write operations to that mount may fail at runtime.

- Operations such as restarts, updates, or deployments may fail if the application is dependent on a storage mount.
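Because a broken mount can make commands like ls fail with errors such as those above, or simply hang, it can help to probe the directory with a time limit. A minimal sketch, assuming the timeout utility is present in the image (GNU coreutils or a recent BusyBox); probe_dir and the 10-second default are illustrative choices, not part of Container Apps.

```shell
# Sketch: list a directory with a time limit, so a broken mount ("Host is
# down", "Resource temporarily unavailable", or a hang) is reported instead
# of blocking the console. Assumes the `timeout` utility exists in the image.
# probe_dir and the 10-second default are illustrative.
probe_dir() {
  local path="$1" secs="${2:-10}" err
  # 2>&1 >/dev/null keeps only stderr in $err and discards the listing.
  if err=$(timeout "$secs" ls -A "$path" 2>&1 >/dev/null); then
    echo "ok: $path"
    return 0
  fi
  echo "failed: $path: ${err:-timed out or no error output}"
  return 1
}

# Example (placeholder path):
# probe_dir /some/path 5
```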

Consider the following when troubleshooting from within the Container App console:

- Ensure the account can be pinged with tcpping, e.g., tcpping somestorageaccount.file.core.windows.net. Test with both ports 443 and 445. You can also use the nc command, such as nc somestorageaccount.file.core.windows.net 445.

- Ensure the address is resolvable. You can use either dig or nslookup, e.g., nslookup somestorageaccount.file.core.windows.net.

  - You can specify a DNS server with the nslookup command by running nslookup somestorageaccount.file.core.windows.net x.x.x.x

- If the address can be resolved and pinged, this is likely not a DNS issue, since the name is resolvable; rather, it may indicate that the client is blocked from access.

- If the address is not resolvable, this may be an issue with the configured DNS. You would also see could not resolve address for someaddress.file.core.windows.net in the mount error.

Additionally, review Securing a custom VNET in Azure Container Apps with Network Security Groups
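The DNS and port checks above can be combined into one script. This is a sketch assuming bash (it uses bash's /dev/tcp redirection), getent and timeout are available in the container; if they are not, the tcpping, nc, nslookup and dig commands above remain the reference. The function names are hypothetical.

```shell
# Sketch: check DNS resolution and TCP reachability of a storage account from
# inside a replica. Assumes bash (for /dev/tcp), plus getent and timeout in
# the image. Function names are hypothetical.
resolve_host() {
  # Prints the resolved address(es); non-zero exit if the name does not resolve.
  getent hosts "$1"
}

check_tcp() {
  local host="$1" port="$2" secs="${3:-5}"
  # Redirecting to /dev/tcp/<host>/<port> makes bash open a TCP connection.
  timeout "$secs" bash -c ': > "/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

check_storage() {
  local host="$1" port rc=0
  if ! resolve_host "$host" >/dev/null; then
    echo "dns: cannot resolve $host"
    return 1
  fi
  # Ports called out above for Azure Files: 443 and 445.
  for port in 443 445; do
    if check_tcp "$host" "$port"; then
      echo "tcp/$port: reachable"
    else
      echo "tcp/$port: blocked or unreachable"
      rc=1
    fi
  done
  return "$rc"
}

# Example (placeholder account name):
# check_storage somestorageaccount.file.core.windows.net
```

If DNS resolves but a port reports blocked, that points toward the firewall/NSG/UDR causes above rather than DNS.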

Incorrect or rotated Access Keys

Keys that are rotated/refreshed:

If you rotate an Access Key on the Storage Account side, the Azure Container Apps Storage resource will not automatically refresh this key for you. If a Container App is restarted after the key has been rotated (but before the Storage resource is updated), operations such as restarts or deployments may fail, and you will likely see an indefinite provisioning status for revision(s) that rely on that Storage resource.

Update the Storage Resource mapped to the Azure Container Apps instance if keys are refreshed.
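One way to do this update is with the Azure CLI: read the current key with az storage account keys list and write it back with az containerapp env storage set. The sketch below assumes the Azure CLI (with the containerapp commands) is installed and signed in, that the app uses key1, and that the storage was defined with ReadWrite access; all resource names are placeholders and update_env_storage_key is a hypothetical helper.

```shell
# Sketch: after rotating a storage account key, write the current key back to
# the Container Apps environment storage definition. Assumes the Azure CLI
# (with the containerapp commands) is installed and signed in, that the app
# uses key1, and that the storage was defined as ReadWrite. All arguments are
# placeholders; update_env_storage_key is a hypothetical helper.
update_env_storage_key() {
  local rg="$1" env="$2" storage_name="$3" account="$4" share="$5" key
  key=$(az storage account keys list \
          --resource-group "$rg" --account-name "$account" \
          --query "[?keyName=='key1'].value | [0]" --output tsv) || return 1
  if [ -z "$key" ]; then
    echo "no key returned for $account" >&2
    return 1
  fi

  az containerapp env storage set \
    --resource-group "$rg" --name "$env" \
    --storage-name "$storage_name" \
    --azure-file-account-name "$account" \
    --azure-file-account-key "$key" \
    --azure-file-share-name "$share" \
    --access-mode ReadWrite
}

# Example (placeholder names):
# update_env_storage_key my-rg my-env mystorage mystorageaccount myfileshare
```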

Permission denied due to volume privileges

Some technologies may require root privileges (sudo), or the ability to control the mount command and permissions used when the volume is mounted by the client or driver.

You can do this on Azure Container Apps by following Container Apps - Setting storage directory permissions - this applies to both SMB and NFS.
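Before changing permissions, it can help to see what options the volume was actually mounted with, alongside the user the process runs as. A sketch, assuming a Linux container with a readable /proc/mounts; mount_options is a hypothetical helper, and the exact option names (for SMB, commonly uid=, gid=, file_mode= and dir_mode=) depend on the client.

```shell
# Sketch: print the options a path was mounted with, to compare against the
# user the process runs as. Reads /proc/mounts unless another file is given.
# mount_options is a hypothetical helper; option names depend on the client
# (for SMB they commonly include uid=, gid=, file_mode= and dir_mode=).
mount_options() {
  local path="${1%/}" mounts="${2:-/proc/mounts}"
  [ -n "$path" ] || path="/"
  # Field 2 is the mount point, field 4 the comma-separated options.
  awk -v p="$path" '$2 == p { print $4; found = 1 } END { exit !found }' "$mounts"
}

# Example (placeholder path):
# echo "running as uid=$(id -u) gid=$(id -g)"
# mount_options /mnt/data | tr ',' '\n' | grep -E '^(uid|gid|file_mode|dir_mode)='
```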

Pod or container exceeded local ephemeral storage limit

NOTE: A specific blog post was created for this topic here - Pod ephemeral storage exceeded with Container Apps. This contains more specific troubleshooting in addition to what’s here.

You may see messages like the below when a pod is exceeding the allowed storage quota limit, which may cause application unavailability.

Container somecontainer exceeded its local ephemeral storage limit "1Gi".

Pod ephemeral local storage usage exceeds the total limit of containers 1Gi.

NOTE: The limit message may differ depending on the allowed quota

Ephemeral volume quota limits are publicly defined here.

For container storage - take note of what is publicly called out in documentation: There are no capacity guarantees. The available storage depends on the amount of disk space available in the container.

For ephemeral (pod) storage, review the CPU defined for the containers, since ephemeral storage scales with the amount of CPU set for a container.

If an application is consistently hitting quota limits, you can:

- Increase the CPU size, aligned with the documentation here, which increases the available ephemeral storage.

- Or, use Azure Files, which offers increased storage size. However, if there are a lot of temporary files, or files that don’t need to be persisted, this option shouldn’t be used, as eventually you’d be at risk of filling up the Azure Files quota as well, unless these files are periodically/systematically deleted.

- Alternatively, write/introduce logic to delete files that aren’t needed over time. This could incur a fair amount of developer work depending on the scenario.
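For the last option, a periodic cleanup can be as simple as a find over a scratch directory. A minimal sketch follows; the directory, the 7-day default and the DRY_RUN switch are assumptions for illustration, and prune_old_files is a hypothetical name. Note that find's -mtime +N matches files whose age, rounded down to whole days, is greater than N.

```shell
# Sketch: delete regular files older than N days under a scratch directory
# the application writes to. The directory and the 7-day default are
# placeholders; set DRY_RUN=1 to only list what would be removed.
# prune_old_files is a hypothetical helper.
prune_old_files() {
  local dir="$1" days="${2:-7}"
  if [ ! -d "$dir" ]; then
    echo "not a directory: $dir" >&2
    return 1
  fi
  # -xdev: stay on this filesystem (do not descend into other mounts).
  # -mtime +N: age in whole days (rounded down) is greater than N.
  if [ "${DRY_RUN:-0}" = "1" ]; then
    find "$dir" -xdev -type f -mtime +"$days" -print
  else
    find "$dir" -xdev -type f -mtime +"$days" -print -exec rm -f {} +
  fi
}

# Example (placeholder path): preview, then delete
# DRY_RUN=1 prune_old_files /opt/data/run-logic/tmp 7
# prune_old_files /opt/data/run-logic/tmp 7
```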

Troubleshooting:

- To find used disk space, you can start with df -a and see if the ephemeral mount has high usage.

- Note the container name in the Container somecontainer exceeded its local ephemeral storage limit "1Gi". message, which can be seen in ContainerAppSystemLogs/ContainerAppSystemLogs_CL or in the Storage Mount Failures detector in the Diagnose and Solve Problems blade. If it’s the application container’s name, that all but confirms the application is doing enough I/O and file/data/content creation on disk to exceed its storage limit.

  - In some cases, a Container App may not explicitly define ephemeralStorage, but the above message may still be shown. Troubleshooting should continue as normal, as it still means enough data is being written to disk to exceed an implied storage limit for the pod.

Use a variety of df -a, du and find commands to figure out where storage is being used. With Container App Jobs this may be harder, but it can possibly be done programmatically with additional development work.
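To complement df and du, find can rank individual files by size. This sketch (largest_files is a hypothetical helper) uses -xdev to stay on one filesystem, so an Azure Files mount under the starting directory is skipped; run it against each mount point you care about, since ephemeral volumes may also be separate filesystems.

```shell
# Sketch: list the N largest files (size in KiB, as reported by du) under a
# directory. -xdev keeps find on one filesystem, so mounts such as Azure Files
# below the starting directory are skipped. largest_files is hypothetical.
largest_files() {
  local dir="${1:-/}" n="${2:-20}"
  find "$dir" -xdev -type f -exec du -k {} + 2>/dev/null | sort -nr | head -n "$n"
}

# Example: the 20 largest files on the root filesystem
# largest_files / 20
```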

Some important tips:

- Always confirm whether the application logic is explicitly writing files/content/etc. to certain directories. Then cross-check these against your AzureFile mounts if you’re using them. You want to ensure the application is correctly writing to a persistent location through AzureFile mounts if this is enabled, which can easily be checked in the Containers blade > Volume mounts on the Container App.

  - As a real-world example, if the application is writing everything to /opt/data/run-logic, but only has AzureFile mounts for /opt/data/run and /mnt/certs, then /opt/data/run-logic is being written to disk and will therefore take up ephemeral space.

- At this point, disk usage needs to be investigated by the application owner(s). There are many guides online on how to use commands to find a directory consuming a large amount of space.

- If content is growing over time, try to capture usage when it’s higher, which can be tough to judge. Otherwise, depending on when the commands are run, they may not show much usage, especially right after a new pod is created.
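To check the /opt/data/run-logic situation described above from inside the replica, you can look up which mount a path falls under and that mount's filesystem type (SMB Azure Files mounts typically appear as cifs, NFS as nfs or nfs4, and container storage often as overlay). A sketch, assuming /proc/mounts is readable; mount_for_path is a hypothetical helper that matches whole path components, so /opt/data/run does not cover /opt/data/run-logic.

```shell
# Sketch: print the mount point a path falls under, and that mount's
# filesystem type. Matches whole path components, so a mount at
# /opt/data/run does not cover /opt/data/run-logic. Reads /proc/mounts
# unless another file is given; the path does not need to exist.
# mount_for_path is a hypothetical helper.
mount_for_path() {
  local path="$1" mounts="${2:-/proc/mounts}"
  awk -v p="$path" '
    {
      mp = $2
      # mp covers p if mp is "/", p equals mp, or p starts with mp + "/".
      if (mp == "/" || p == mp || index(p, mp "/") == 1) {
        # Keep the longest match; ">=" lets a later (stacked) mount win.
        if (length(mp) >= best_len) { best_len = length(mp); best = mp " " $3 }
      }
    }
    END { if (best == "") exit 1; print best }
  ' "$mounts"
}

# Example with the paths from the scenario above:
# mount_for_path /opt/data/run-logic/output.log   # e.g. "/ overlay" -> ephemeral
# mount_for_path /opt/data/run/output.log         # e.g. "/opt/data/run cifs" -> Azure Files
```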

For example, to find the “human readable” size of the current directory, run du -ksh . from within it. To target a specific directory, use du -ksh /path/to/dir:

```text
[root@somereplica]# du -ksh .
12G .

[root@somereplica]# du -ksh /path/to/dir
12G /path/to/dir
```

You can also use other commands like this:

```shell
# Largest directories across the whole filesystem
du -x -h --max-depth=2 / 2>/dev/null | sort -hr | head -n 50
# Largest directories in the current directory
du -sh * 2>/dev/null | sort -hr | head -n 20
# Large directories from a recursive search in the current directory
du -h --max-depth=2 2>/dev/null | sort -hr | head -n 30
```

Change -n to a higher number to list more output. With filesystems with large usage/many files, these commands may take a while to run.
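Since usage may only be high at certain times, it can help to sample it on an interval instead of running du once. A minimal sketch: watch_usage, the 60-second interval and the 10-sample default are placeholders; du -x keeps the total to the directory's own filesystem, so mounts below it (such as Azure Files) are not counted.

```shell
# Sketch: sample disk usage of a directory at a fixed interval, so growth is
# captured while it happens. watch_usage, the 60-second interval and the
# 10-sample default are placeholders. du -x stays on the directory's own
# filesystem, so mounts below it (such as Azure Files) are not counted.
watch_usage() {
  local dir="$1" interval="${2:-60}" count="${3:-10}" i=0
  while [ "$i" -lt "$count" ]; do
    # One line per sample: UTC timestamp, then the total size in KiB.
    printf '%s %s\n' "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$(du -skx "$dir" 2>/dev/null | cut -f1)"
    i=$((i + 1))
    if [ "$i" -lt "$count" ]; then
      sleep "$interval"
    fi
  done
}

# Example (placeholder path): 12 samples, 5 minutes apart
# watch_usage /opt/data/run-logic 300 12
```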

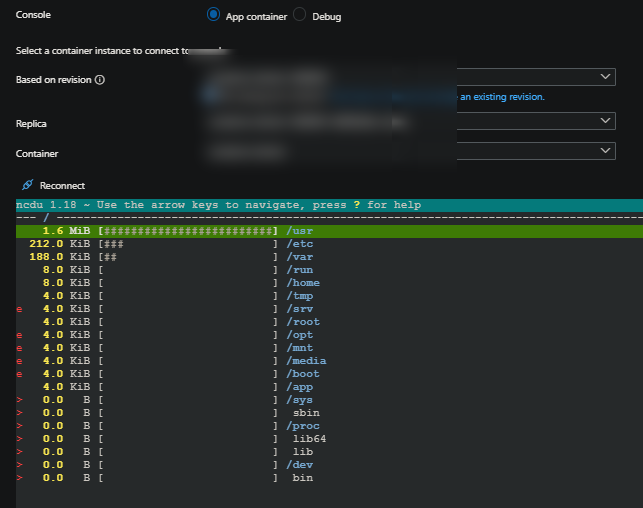

An interactive way to check usage is the ncdu command. This can be installed via the following:

- Debian/Ubuntu: apt-get install ncdu -yy

- Alpine: apk add ncdu ncdu-doc

If you get Error opening terminal: unknown. when trying to invoke ncdu, run export TERM=xterm (or another relevant terminal type), then rerun the command.

Typing ncdu on its own loads the terminal UI, iterates over the file system, and by default shows usage from largest to smallest. There are additional flags that can be passed in; for example, run ncdu -x / to start from the root filesystem. When the UI loads, use the right arrow key to go into a directory, which shows the space used by everything in it, and so on. Use the left arrow key to navigate back.

IMPORTANT: All of the above commands run with the application container's permissions. If they are run as non-root, or without permission to read certain directories, some of these commands may fail or not show anything.