Container Apps: Troubleshooting image pull errors

This post will cover image pull errors and what they may mean on Azure Container Apps.

Overview

Image pull errors can happen for numerous reasons, and which reason applies often depends on the scenario. The errors can also surface in different places, depending on where you're deploying or changing the image and/or tag.

General reasons that cause image pulls

In general, an image pull happens whenever a new pod/replica is created. Examples include explicitly creating a new revision, scaling out, or editing existing containers in a way that counts as a revision-scope change.

This is important to understand. Suppose registry credentials or networking settings were changed and not correctly updated/validated on the Container App. The next image pull then fails, which can cause the new revision (or existing ones) to be marked as failed.
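The Python sketch below roughly illustrates which configuration changes lead to a pull. It assumes the split described in the Azure Container Apps revision documentation:

- Changes under `properties.template` (containers, scale rules) are revision-scope and create a new revision.
- Changes under `properties.configuration` (secrets, registries, ingress) are application-scope and do not.

The function name and the dotted-path input format are hypothetical. Scaling out also creates replicas without any configuration change, so this sketch covers only one of the triggers.

```python
from typing import Iterable

# Assumption (from the Container Apps revision docs): revision-scope changes
# live under properties.template, e.g. containers and scale rules, while
# application-scope changes live under properties.configuration, e.g.
# secrets, registries and ingress.
REVISION_SCOPE_PREFIX = "properties.template"


def creates_new_revision(changed_paths: Iterable[str]) -> bool:
    """Return True if any changed property path is revision-scope.

    A new revision means new replicas, and new replicas mean image pulls.
    """
    return any(
        path == REVISION_SCOPE_PREFIX or path.startswith(REVISION_SCOPE_PREFIX + ".")
        for path in changed_paths
    )


# Changing the image tag is revision-scope, so a pull follows.
# creates_new_revision(["properties.template.containers[0].image"])  -> True
# Updating a registry secret is application-scope: no new revision is made,
# so a bad value only surfaces at the next revision or scale-out.
# creates_new_revision(["properties.configuration.secrets"])  -> False
```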

Troubleshooting

Differences in message format between certain failures

When you deploy or make an update that causes an image to be pulled (explained above), the format of the error may differ slightly depending on where and how this happens.

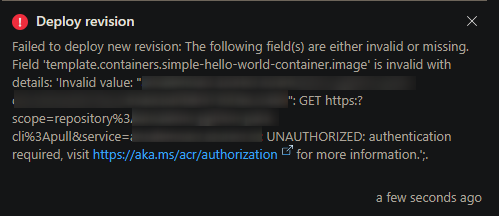

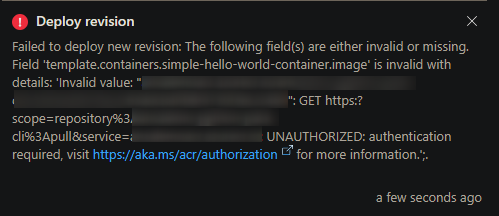

When you make changes through a GUI, such as directly in the Azure Portal, the error may appear in a popout message/toast as shown in the screenshots above. The same style of message may also appear with Bicep/ARM/other IaC deployment methods and other tools, but written out to the terminal/console instead.

When this happens (for example, with a portal change like the one above) and you view your Log Analytics workspace, you'll see this message:

Persistent Image Pull Errors for image "someregistry.com/image:atest". Please check https://aka.ms/cappsimagepullerrors.

The main reason for the failure is given in the popout message above. For IaC or CLI methods, it is written to the terminal instead.

In other scenarios, pod movement happens more organically, for example when scaling rules are triggered or for other reasons that are not directly user-initiated. There, the error may instead appear in the Logs blade / Log Analytics workspace in the format below. Notice the difference between this format and the one above:

Failed to pull image "someacr.azurecr.io/someimage:sometag": rpc error: code = Unknown desc = failed to pull and unpack image "someacr.azurecr.io/someimage:sometag": failed to resolve reference "someacr.azurecr.io/someimage:sometag": ...

Even though the formatting differs, you can still use the common scenarios below for troubleshooting.
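You may export these messages (for example, from a Log Analytics query) and want to sort them. A minimal Python sketch like the one below can tell the two formats apart and extract the image reference. The format labels and the function name are hypothetical. The regular expressions only match the two message prefixes quoted in this post.

```python
import re
from typing import Optional, Tuple

# The summary form written for user-initiated operations, and the raw
# runtime form written for pulls triggered by scaling or other pod movement.
PERSISTENT_RE = re.compile(r'^Persistent Image Pull Errors for image "([^"]+)"')
RUNTIME_RE = re.compile(r'^Failed to pull image "([^"]+)"')


def parse_pull_error(message: str) -> Optional[Tuple[str, str]]:
    """Return (format, image) for a recognised image pull error, else None."""
    text = message.strip()
    match = PERSISTENT_RE.match(text)
    if match:
        return "persistent", match.group(1)
    match = RUNTIME_RE.match(text)
    if match:
        return "runtime", match.group(1)
    return None
```

Either format gives you the image reference, which you can then check against the scenarios below.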

Viewing logs

Log Analytics / Logs blade:

You can go to the Logs blade on your Container App and run the following query to look for all occurrences of image pulls.

ContainerAppSystemLogs_CL
| where RevisionName_s == "somerevision--f0wyhu0"
| where Log_s startswith "Pulling image"
    or Log_s startswith "Successfully pulled image"
    or Log_s startswith "Failed to pull image"
    or Log_s == "Error: ImagePullBackOff"
    or Log_s startswith "Persistent Image Pull Errors"
| project TimeGenerated, Log_s, RevisionName_s, ReplicaName_s

Take note of the `ReplicaName_s` column. A change in replica name indicates that new pods/replicas were created. You can also correlate this with events where ContainerAppSystemLogs_CL shows `Created container [containername]`, indicating the container was created in the new pod/replica.
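You may export the query results (for example, as CSV from the Logs blade). In that case, a small Python sketch can summarize the pull events per replica, which makes new replicas and their outcomes easier to spot. It assumes each row is a dictionary keyed by the column names from the `project` line of the query above. The function name and the event labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping

# Map each Log_s prefix used in the query above to a short event label.
PREFIXES = {
    "Pulling image": "pulling",
    "Successfully pulled image": "pulled",
    "Failed to pull image": "failed",
    "Error: ImagePullBackOff": "backoff",
    "Persistent Image Pull Errors": "persistent",
}


def summarize_by_replica(rows: Iterable[Mapping[str, str]]) -> Dict[str, Dict[str, int]]:
    """Count pull-related events per ReplicaName_s; other rows are ignored."""
    summary: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for row in rows:
        log = row.get("Log_s", "")
        # Some system events may have no replica name; group them together.
        replica = row.get("ReplicaName_s") or "<none>"
        for prefix, label in PREFIXES.items():
            if log.startswith(prefix):
                summary[replica][label] += 1
                break
    # Convert nested defaultdicts to plain dicts for printing/comparison.
    return {replica: dict(counts) for replica, counts in summary.items()}
```

For a CSV export, passing `csv.DictReader(f)` over the opened file should work, provided the header row uses the same column names as the query.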

Log Stream:

You can use the Log Stream blade and switch it to “System” to view system logs there as well.

Common errors

Denying access to Managed Identity endpoints for image pull authentication

If you are using Managed Identity, and especially if you are using a VNET, ensure that the endpoints listed here are allowed.

If they are blocked, the pull may fail with an UNAUTHORIZED message, especially during net-new creations or updates from an external client to Azure Container Apps. The same causes described in the networking section below apply to this scenario as well:

- A UDR on a subnet to an NVA/Firewall blocking traffic (this is the most common)

- An outbound NSG rule blocking traffic

- Another form of misconfigured subnet to ACR/container registry integration

- Firewall on the target resource denying incoming traffic

You can apply the troubleshooting approach from the networking section below to these endpoints to confirm they are reachable from the Container App Environment.

Authentication or Authorization related

A common error is a 401 returned when trying to pull an image. This can occur in scenarios such as the following:

- You're using Admin Credential (username/password) based authentication with your Azure Container Registry.

  - In this case, the Azure Container Registry key is stored as a secret in the "Secrets" blade on the Container App, normally named in the form of `acrnameazurecrio-acrname`. If the key was rotated, or changed to an incorrect value, this can cause the 401 shown below.

- You're using Managed Identity authentication for the image pull, and the identity does not have the `AcrPull` role assigned.

Failed to pull image "someacr.azurecr.io/image:tag": [rpc error: code = Unknown desc = failed to pull and unpack image "someacr.azurecr.io/image:tag": failed to resolve reference "someacr.azurecr.io/image:tag": failed to authorize: failed to fetch oauth token: unexpected status from GET request to https://someacr.azurecr.io/oauth2/token?scope=repository%3repo%image%3Apull&service=someacr.azurecr.io: 401 Unauthorized, rpc error: code = Unknown desc = failed to pull and unpack image "someacr.azurecr.io/iamge:tag": failed to resolve reference "someacr.azurecr.io/iamge:tag": failed to authorize: failed to fetch anonymous token: unexpected status from GET request to https://someacr.azurecr.io/oauth2/token?scope=repository%3repo%2Fimage%3Apull&service=someacr.azurecr.io: 401 Unauthorized]
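The `scope` query parameter in the token URL shows which repository and action the runtime requested from the registry. This helps confirm that the repository being authorized is the one you expect. Below is a minimal Python sketch using only the standard library; the function name is hypothetical. The example message above appears to be partially redacted, so the sketch assumes a well-formed URL such as the one in the "not found" error later in this post.

```python
from urllib.parse import parse_qs, urlparse


def token_request_details(url: str) -> dict:
    """Decode the scope and service of an ACR /oauth2/token request URL."""
    query = parse_qs(urlparse(url).query)  # parse_qs percent-decodes values
    scope = query.get("scope", [""])[0]    # e.g. "repository:someimage:pull"
    kind, _, rest = scope.partition(":")
    # The repository name may contain "/", so split the action off the end.
    repository, _, action = rest.rpartition(":")
    return {
        "type": kind,
        "repository": repository,
        "action": action,
        "service": query.get("service", [""])[0],
    }
```

If `repository` shows a misspelled name, the 401 may be a naming problem rather than a credential problem.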

An HTTP 403 may appear if the registry has a firewall enabled or a Private Endpoint set. This can overlap with the "Network blocks or access issues" section below:

Failed to pull image "someacr.azurecr.io/image:tag": rpc error: code = Unknown desc = failed to pull and unpack image "someacr.azurecr.io/image:tag": failed to resolve reference "someacr.azurecr.io/image:tag": unexpected status from HEAD request to https://someacr.azurecr.io/image:tag/v2/image/manifests/tag: 403 Forbidden

Other errors:

Failed to pull image "someacr.azurecr.io/someimage:sometag": rpc error: code = Unknown desc = failed to pull and unpack image "someacr.azurecr.io/someimage:sometag": failed to resolve reference "someacr.azurecr.io/someimage:sometag": pull access denied, repository does not exist or may require authorization: server message: insufficient_scope: authorization failed
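The mapping from these authentication-related signatures to their likely causes can be written down as a small lookup table. The Python sketch below is a hypothetical triage helper. The hints paraphrase the causes listed in this section, and a message containing several signatures returns several hints.

```python
import re
from typing import List

# Signatures from the auth-related messages above, paired with the likely
# causes this section lists for each one.
AUTH_HINTS = [
    (re.compile(r"\b401 Unauthorized\b"),
     "Check the registry credential: admin key secret rotated/incorrect, "
     "or managed identity missing AcrPull."),
    (re.compile(r"\b403 Forbidden\b"),
     "Check registry firewall / Private Endpoint settings (see networking)."),
    (re.compile(r"insufficient_scope"),
     "Repository may not exist, or the credential lacks pull access."),
]


def auth_hints(message: str) -> List[str]:
    """Return every hint whose signature appears in the message."""
    return [hint for pattern, hint in AUTH_HINTS if pattern.search(message)]
```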

Network blocks or access issues

A networked environment may use custom DNS, NSG rules, or UDRs, among other things. In such an environment, pulls may fail for any of these reasons:

- The registry name does not resolve properly.

- Traffic is not routed to the registry.

- Traffic is blocked by another appliance or service along the way.

- The registry itself blocks the request.

In these cases, it is worth reviewing the following:

- Whether you can resolve the hostname of the container registry you're trying to pull from. This validates that DNS lookups work. If you use a jumpbox to validate this, make sure the lookup is done from the jumpbox itself.

  - You can also test this within the Container App, as long as there is a running revision, by going to the Console tab, which opens a `bash`/`sh` terminal.

- The kind of access on the registry side: is there a firewall enabled or a Private Endpoint set?

- Whether the image pull succeeds through public network access. In some cases this is a useful check, because it narrows the problem down to the networked environment.

- Any routing (UDRs) and VNETs (peered or not). Both of these are only supported on the Dedicated + Consumption SKU. Also check restrictions on the Azure Container Registry side, such as "select networks"/firewalls and Private Endpoints.

- For NSGs, review Securing a custom VNET in Azure Container Apps with Network Security Groups.

- For UDRs and NAT gateway integrations, see Azure Container Apps - Networking - Routes.

Common networking related errors are:

DNS related:

Common themes may be:

- Custom DNS servers unable to resolve the registry name

- Generally misconfigured custom DNS server

- Misconfigured Private DNS zones on Azure Container Registry

- Mistyped registry name, or the registry doesn't exist

- Misconfigured Private Endpoint or records

Failed to pull image "someacr.azurecr.io/someimage:sometag": rpc error: code = Unknown desc = failed to pull and unpack image "someacr.azurecr.io/someimage:sometag": failed to resolve reference "someacr.azurecr.io/someimage:sometag": failed to do request: Head "https://someacr.azurecr.io/someimage:sometag/v2/someimage/manifests/sometag": dial tcp: lookup someacr.azurecr.io on 168.63.129.16:53: no such host

Failed to pull image "someacr.azurecr.io/someimage:sometag": rpc error: code = Unknown desc = failed to pull and unpack image "msomeacr.azurecr.io/someimage:sometag": failed to resolve reference "someacr.azurecr.io/someimage:sometag": unexpected status from HEAD request to https://someacr.azurecr.io/v2/someimage/manifests/sometag: 503 Service Unavailable

You can troubleshoot these kinds of issues in two general ways: from another active/running Container App, or from a Virtual Machine attached to the same subnet.

For troubleshooting from a different Container App:

- You can look into using Container Apps - Debug console, which may be a much easier approach

- Installing troubleshooting tools completely depends on the image distribution and/or if outbound internet traffic to install packages is allowed.

- To test name resolution to the resource, use tools like `dig` or `nslookup`. To test against a different DNS server, pass it as an argument, for example `nslookup someacr.azurecr.io 8.8.8.8`.

For troubleshooting from a VM in the subnet:

- Ensure the VM is added to the subnet

- Install the same tools listed above to test name resolution
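Python may be available in the debug console image or on the VM. This is an assumption, since many minimal images don't include it. If it is, the sketch below performs the same lookup with the standard library. Unlike `nslookup someacr.azurecr.io 8.8.8.8`, the standard library cannot target a specific DNS server, so this only tests the environment's configured resolver. For a registry behind a Private Endpoint, you would generally expect private addresses back. Both `resolve` and `looks_private` are hypothetical helper names.

```python
import ipaddress
import socket
from typing import List


def resolve(hostname: str, port: int = 443) -> List[str]:
    """Resolve hostname with the system resolver and return unique addresses.

    Uses whatever DNS configuration the environment has (custom DNS servers,
    Private DNS zones), which is what the image pull also depends on.
    Raises socket.gaierror when the name cannot be resolved, roughly the
    equivalent of "no such host" in the pull error.
    """
    addresses: List[str] = []
    for _family, _type, _proto, _canon, sockaddr in socket.getaddrinfo(
        hostname, port, proto=socket.IPPROTO_TCP
    ):
        if sockaddr[0] not in addresses:
            addresses.append(sockaddr[0])
    return addresses


def looks_private(addresses: List[str]) -> bool:
    """True if every resolved address is private, as you would expect when a
    Private Endpoint and its DNS records are set up correctly."""
    return bool(addresses) and all(
        # Strip an IPv6 zone suffix such as "%eth0" before parsing.
        ipaddress.ip_address(addr.split("%")[0]).is_private for addr in addresses
    )
```

A `socket.gaierror` from `resolve("someacr.azurecr.io")` lines up with the "no such host" error above. A public address where a Private Endpoint is expected points toward the Private DNS zone or record configuration.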


Failed to pull image "someacr.azurecr.io/someimage:sometag": rpc error: code = Unknown desc = failed to pull and unpack image "someacr.azurecr.io/someimage:sometag": failed to resolve reference "someacr.azurecr.io/someimage:sometag": failed to do request: Head "https://someacr.azurecr.io/someimage:sometag/v2/image/manifests/tag": dial tcp: lookup someacr.azurecr.io: i/o timeout


NOTE: An i/o timeout may be DNS related, but it can also be proxy related. That is why it also appears in the outbound networking errors below.

Outbound networking related:

The below errors could potentially be caused by:

- A UDR on a subnet to an NVA/Firewall blocking traffic

- An outbound NSG rule blocking traffic

- Another form of misconfigured subnet to ACR/container registry integration

- Firewall on the target resource denying incoming traffic

Failed to pull image "someacr.azurecr.io/someimage:sometag": rpc error: code = Unknown desc = failed to pull and unpack image "someacr.azurecr.io/someimage:sometag": failed to resolve reference "someacr.azurecr.io/someimage:sometag": failed to do request: Head "https://someacr.azurecr.io/someimage:sometag/v2/image/manifests/tag": dial tcp: lookup someacr.azurecr.io: i/o timeout

Failed to pull image "someacr.azurecr.io/someimage:sometag": rpc error: code = Unknown desc = failed to pull and unpack image "someacr.azurecr.io/someimage:sometag": failed to copy: read tcp xx.x.x.xxx:xxxxx->xx.xxx.xx.xxx:xxx: read: connection reset by peer

Failed to pull image "someacr.azurecr.io/someimage:sometag": rpc error: code = Unknown desc = failed to pull and unpack image "someacr.azurecr.io/someimage:sometag": failed to copy: httpReadSeeker: failed open: failed to do request: Get "httpsomeacr.azurecr.io/someimage:sometag": net/http: TLS handshake timeout

Failed to pull image "someacr.azurecr.io/someimage:sometag": rpc error: code = Canceled desc = failed to pull and unpack image "someacr.azurecr.io/someimage:sometag": failed to resolve reference "someacr.azurecr.io/someimage:sometag": failed to do request: Head "someacr.azurecr.io/someimage:sometag": context canceled

DENIED: client with IP 'xx.xx.xxx1.xx' is not allowed access, refer https://aka.ms/acr/firewall to grant access'
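When triaging a batch of these messages, a simple substring lookup can tag each one with the group it most likely belongs to. The Python sketch below does this. The signatures come from the DNS and outbound messages quoted in this post, and the category names are hypothetical. Real messages can contain several signatures. Here the first match in list order wins, which is a simplification.

```python
from typing import Optional

# (signature, category) pairs taken from the messages quoted in this post.
# "i/o timeout" is tagged dns-or-proxy because it can be either (see NOTE).
NETWORK_SIGNATURES = [
    ("no such host", "dns"),
    ("503 Service Unavailable", "dns"),
    ("i/o timeout", "dns-or-proxy"),
    ("connection reset by peer", "outbound"),
    ("TLS handshake timeout", "outbound"),
    ("context canceled", "outbound"),
    ("is not allowed access", "registry-firewall"),
]


def classify_network_error(message: str) -> Optional[str]:
    """Return the category of the first matching signature, or None."""
    for signature, category in NETWORK_SIGNATURES:
        if signature in message:
            return category
    return None
```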

You can troubleshoot these kinds of issues in the same two general ways mentioned above.

For troubleshooting from a different Container App:

- You can look into using Container Apps - Debug console, which may be a much easier approach

- Installing troubleshooting tools completely depends on the image distribution and/or if outbound internet traffic to install packages is allowed.

- To test connectivity to a target resource, you can use tools like `nc`, `tcpping`, or `curl`.

  - If using `nc`, passing certain flags may make it seem like there is no connection. Ensure you also pass the proper port, e.g. `nc -vz someacr.azurecr.io 443`.

For troubleshooting from a VM in the subnet:

- Ensure the VM is added to the subnet

- Install the same tools listed above to test connectivity
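`nc` or `tcpping` may not be installed while Python is (again, an assumption about the image or VM). In that case, a TCP check like the sketch below gives roughly the same answer as `nc -vz someacr.azurecr.io 443`. The function name is hypothetical. It only confirms that a TCP connection can be opened; it does not validate TLS, proxies, or registry authentication.

```python
import socket


def can_connect(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Roughly what `nc -vz host 443` checks: name resolution plus a TCP
    handshake.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures (socket.gaierror), refusals, resets and timeouts.
        return False
```

Suppose `can_connect("someacr.azurecr.io")` returns `False` while name resolution succeeds. That points toward the routing, NSG, or firewall causes listed above.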

Missing or incorrect image name/tag

If the image and/or tag you're targeting does not exist in the registry you're pulling from, this may manifest as the errors below.

If this is the case, ensure that the:

- Image exists and is correctly spelled

- Tag exists and is correctly spelled

It can also help to test whether the image can be pulled to your local machine.

Some errors you may see are below:

Failed to pull image "someacr.azurecr.io/someimage:sometag": [rpc error: code = NotFound desc = failed to pull and unpack image "someacr.azurecr.io/someimage:sometag": failed to resolve reference "someacr.azurecr.io/someimage:sometag": someacr.azurecr.io/someimage:sometag: not found, rpc error: code = Unknown desc = failed to pull and unpack image "someacr.azurecr.io/someimage:sometag": failed to resolve reference "someacr.azurecr.io/someimage:sometag": failed to authorize: failed to fetch anonymous token: unexpected status from GET request to https://someacr.azurecr.io/oauth2/token?scope=repository%3Asomeimage%3Apull&service=someacr.azurecr.io: 401 Unauthorized]

Failed to pull image "someacr.azurecr.io/someimage:sometag": rpc error: code = Unknown desc = failed to pull and unpack image "someacr.azurecr.io/someimage:sometag": failed to resolve reference "someacr.azurecr.io/someimage:sometag": pull access denied, repository does not exist or may require authorization: server message: insufficient_scope: authorization failed

'Invalid value: "somehost.com/someimage:tag": GET https:: unexpected status code 404 Not Found:
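Checking the spelling is easier once the reference is split into its parts. The parts also map onto the manifest URL that the runtime sends its HEAD request to. This URL follows the registry HTTP API v2 path `/v2/<name>/manifests/<reference>`, which matches the HEAD requests in the errors earlier in this post. A "not found" or 404 for that URL generally means the image or tag doesn't exist as written.

The Python sketch below does the split and builds the URL. It makes two assumptions: the reference includes a registry host and an explicit tag, as in the examples here, and digests and default-registry shorthand are out of scope. The helper names are hypothetical.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split "registry/repository:tag" into its parts (simplified sketch)."""
    registry, sep, remainder = ref.partition("/")
    if not sep or not remainder:
        raise ValueError(f"expected registry/repository:tag, got {ref!r}")
    # The repository may contain "/", so split the tag off the last ":".
    repository, sep, tag = remainder.rpartition(":")
    if not sep or not repository or not tag or "/" in tag:
        raise ValueError(f"expected an explicit tag in {ref!r}")
    return ImageRef(registry, repository, tag)


def manifest_url(ref: str) -> str:
    """Registry API v2 manifest URL the runtime requests for this reference."""
    image = parse_image_ref(ref)
    return f"https://{image.registry}/v2/{image.repository}/manifests/{image.tag}"
```

Requesting that URL yourself would also need a registry token, which this sketch does not handle.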

Common scenarios

ACR Access Keys rotated but not updated on the Container App

Suppose Azure Container Registry access keys are rotated but not updated on the Container App. Operations that initiate a pull, such as creating a new revision or scaling out, can then fail to pull the image. In the portal, you would see this (in other tools, such as CLI-based deployment tools, it would be written to stdout):

NOTE: You can still restart an existing running revision.

If these keys are rotated, the clients (for example, Azure Container Apps) have no awareness of the change on the ACR side until the point of failure.

To change/validate the keys:

- Go to the Secrets blade and click edit (or click the eye icon to view the current value)

- If the key does not match what is showing as either the primary or secondary key in Azure Container Registry, then the key on the Container App side needs to be updated.

- Copy a key from ACR, then edit and save the secret in the Azure Container Apps portal

- Retry the operation that failed

NOTE: Given the above, suppose the ACR keys were rotated at some point and replica/pod movement happens later (such as due to a revision creation). The image pull will then fail when the new pods/replicas are created.
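The check in this scenario is whether the Container App secret matches either ACR key. It can be sketched in Python as below. Both functions are hypothetical helpers. `expected_secret_name` reproduces the `acrnameazurecrio-acrname` naming pattern this post describes (dots dropped, username appended after a hyphen), which is an observed convention rather than a guaranteed rule. The values are ones you paste in from the Secrets blade and the ACR Access keys blade; nothing is fetched from Azure.

```python
import hmac
from typing import Iterable


def expected_secret_name(login_server: str, username: str) -> str:
    """Assumed naming pattern: login server without dots, "-", then username."""
    return login_server.replace(".", "") + "-" + username


def secret_matches_acr_key(secret_value: str, acr_keys: Iterable[str]) -> bool:
    """True if the secret equals any current ACR key (primary or secondary).

    Uses a constant-time comparison, a reasonable habit for credentials.
    """
    return any(
        hmac.compare_digest(secret_value.encode(), key.encode()) for key in acr_keys
    )
```

If `secret_matches_acr_key` returns `False` for both current keys, the secret is stale and needs updating as described in the steps above.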